Running multicast over a hub-and-spoke frame-relay network can be tricky. In a previous lesson, I described the issue you run into when you are using Auto-RP and your mapping agent is behind a spoke. This time we'll take a look at PIM NBMA mode.

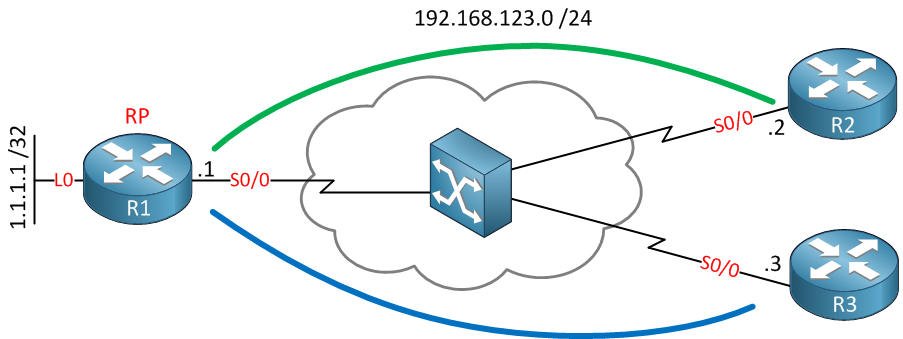

Let me show you the topology that I will use to explain and demonstrate this to you:

Above, you see three routers. R1 is the hub router, and R2 and R3 are the spokes. We are using a point-to-multipoint frame-relay interface, so there is only a single subnet. R1 is also the RP (Rendezvous Point).

PIM treats our frame-relay network as a broadcast medium: it expects all routers on the subnet to hear each other directly. That is only true when we have a full mesh. In a hub-and-spoke topology like the one above, there are only PVCs between the hub and each spoke, so the spoke routers cannot reach each other directly. Everything has to go through the hub router.
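A quick way to see PIM's assumption at work is to check whether every pair of routers on the subnet shares a PVC. The Python sketch below is a toy model: the PVC lists are hypothetical inputs that mirror the topology above, not something read from a router. It lists the router pairs that cannot hear each other directly.

```python
from itertools import combinations


def missing_pvcs(routers: list[str], pvcs: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return router pairs that have no direct PVC between them.

    PIM's broadcast assumption only holds when this list is empty (full mesh).
    """
    # A PVC is bidirectional, so store each one as an unordered pair.
    links = {frozenset(pvc) for pvc in pvcs}
    return [pair for pair in combinations(routers, 2) if frozenset(pair) not in links]


# Hypothetical PVC lists mirroring the lesson's topology.
routers = ["R1", "R2", "R3"]
hub_and_spoke = [("R1", "R2"), ("R1", "R3")]
full_mesh = hub_and_spoke + [("R2", "R3")]

print(missing_pvcs(routers, hub_and_spoke))  # → [('R2', 'R3')]
print(missing_pvcs(routers, full_mesh))      # → []
```

An empty list means a full mesh, where PIM's broadcast assumption holds. In the hub-and-spoke case, R2 and R3 have no PVC between them, so they never hear each other's PIM messages.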

This causes some issues. First, whenever a spoke router sends a multicast packet, the hub router receives it but doesn't forward it to the other spoke routers. The incoming interface (the one selected by the RPF check) is never part of the outgoing interface list, so a multicast router never sends a packet back out of the interface it received it on, and both spokes sit behind the same Serial0/0 interface. One way of dealing with this problem is to use point-to-point sub-interfaces, which solves this split-horizon problem because each spoke is then reached through its own interface.
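The forwarding rule can be sketched as a toy model in Python. It assumes the outgoing interface list (OIL) is tracked per interface, which is the default (non-NBMA) behavior described here; the sub-interface names Serial0/0.102 and Serial0/0.103 are hypothetical.

```python
def forward(incoming_interface: str, oil: set[str]) -> set[str]:
    """Return the interfaces a multicast packet is copied to.

    The incoming interface is excluded from the OIL, so a packet is never
    sent back out of the interface it arrived on.
    """
    return oil - {incoming_interface}


# Point-to-multipoint: R2 (source) and R3 (receiver) are both behind Serial0/0,
# so the only interface in the OIL is the one the traffic arrived on.
p2mp = forward("Serial0/0", {"Serial0/0"})

# Point-to-point sub-interfaces (hypothetical names): traffic from R2 arrives
# on Serial0/0.102 and R3 is reached through Serial0/0.103.
p2p = forward("Serial0/0.102", {"Serial0/0.103"})

print(sorted(p2mp))  # → []
print(sorted(p2p))   # → ['Serial0/0.103']
```

With a single point-to-multipoint interface the result is empty, which is exactly why R3 never sees R2's traffic; splitting the spokes onto separate interfaces gives the hub somewhere legitimate to forward to.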

The other problem is that spoke routers don't hear each other's PIM messages. For example, let's say that R2 and R3 are both receiving the same multicast stream. After a while, there are no longer any users behind R2 who are interested in this stream, so R2 sends a PIM prune message to R1.

If R3 still has active receivers, it would normally send a PIM join to override the prune, letting R1 know that it still wants to receive the multicast stream. R1, however, assumes that all PIM routers on the interface heard the prune message from R2, and that is not the case in our hub-and-spoke topology: only the hub router received it, and R3 never saw it. As a result, R1 prunes the multicast stream from Serial0/0, and R3 stops receiving it…
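The prune/override exchange can be modeled in a few lines of Python. This is a simplified sketch, not a PIM implementation: `hears` is a hypothetical map of which routers receive a PIM message sent by each spoke, and the hub's prune delay is reduced to the question "did any router that still wants the stream overhear the prune?"

```python
def hub_keeps_forwarding(pruner: str, still_interested: set[str],
                         hears: dict[str, set[str]]) -> bool:
    """Return True if the hub keeps the shared interface in its OIL after a prune.

    Only routers in hears[pruner] overheard the prune, so only they can
    answer with an overriding join before the hub's prune delay expires.
    """
    overriders = (still_interested - {pruner}) & hears[pruner]
    return bool(overriders)


# Who receives a PIM message sent by each spoke (hypothetical inputs).
full_mesh = {"R2": {"R1", "R3"}, "R3": {"R1", "R2"}}
hub_and_spoke = {"R2": {"R1"}, "R3": {"R1"}}

# R2 prunes while R3 still has receivers.
print(hub_keeps_forwarding("R2", {"R3"}, full_mesh))      # → True
print(hub_keeps_forwarding("R2", {"R3"}, hub_and_spoke))  # → False
```

In the full mesh R3 overhears the prune and overrides it; in the hub-and-spoke case nobody can, so the hub cuts off R3 along with R2.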

PIM NBMA mode solves the issues that I just described. Basically, it tells PIM to treat the frame-relay network as a collection of point-to-point links instead of a multi-access network. Let's configure the example above so you can see how it works.
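Conceptually, NBMA mode makes the hub remember which neighbor sent each join on the interface. The Python sketch below illustrates that idea under one assumption: the hub keeps one outgoing entry per (interface, neighbor) pair. It is not how IOS stores its tables internally, but it shows both problems going away: a prune removes only the pruning spoke, and traffic from one spoke can go back out the same interface to another.

```python
class NbmaOil:
    """Outgoing interface list keyed by (interface, neighbor) instead of interface."""

    def __init__(self) -> None:
        self.entries: set[tuple[str, str]] = set()

    def join(self, interface: str, neighbor: str) -> None:
        self.entries.add((interface, neighbor))

    def prune(self, interface: str, neighbor: str) -> None:
        # Only the pruning neighbor's entry goes away; other spokes keep theirs.
        self.entries.discard((interface, neighbor))

    def forward(self, interface: str, from_neighbor: str) -> set[tuple[str, str]]:
        # Skip only the neighbor the packet came from, not the whole interface.
        return self.entries - {(interface, from_neighbor)}


R2, R3 = "192.168.123.2", "192.168.123.3"
oil = NbmaOil()
oil.join("Serial0/0", R2)
oil.join("Serial0/0", R3)
oil.prune("Serial0/0", R2)  # R2 loses interest; R3's entry is untouched

# Traffic from R2 arrives on Serial0/0 and is still sent to R3.
print(oil.forward("Serial0/0", R2))  # → {('Serial0/0', '192.168.123.3')}
```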

OSPF has been configured to advertise the loopback0 interface of R1 so that we can use it as the IP address for the RP. Let’s start by enabling PIM on the interfaces:

R1(config)#interface serial 0/0

R1(config-if)#ip pim sparse-mode

R2(config)#interface serial 0/0

R2(config-if)#ip pim sparse-mode

R3(config-if)#interface serial 0/0

R3(config-if)#ip pim sparse-mode

This activates PIM on all serial interfaces. Let's verify that we have PIM neighbors:

R1#show ip pim neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

S - State Refresh Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

192.168.123.3 Serial0/0 00:03:51/00:01:21 v2 1 / DR S

192.168.123.2 Serial0/0 00:04:04/00:01:35 v2 1 / S

That's looking good. Now let's configure the RP:

R1(config)#ip pim rp-address 1.1.1.1

R2(config)#ip pim rp-address 1.1.1.1

R3(config)#ip pim rp-address 1.1.1.1

I will use a static RP as it saves the hassle of configuring Auto-RP and a mapping agent. Let's configure R3 as a receiver for the 239.1.1.2 multicast group address. I will use R2 as a source by sending pings:

R3(config-if)#ip igmp join-group 239.1.1.2

R2#ping 239.1.1.2 repeat 9999

Type escape sequence to abort.

Sending 9999, 100-byte ICMP Echos to 239.1.1.2, timeout is 2 seconds:

.....

As you can see, no replies are coming back. Let's take a closer look to see what is going on:

R3#show ip mroute 239.1.1.2

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.2), 00:01:40/00:02:27, RP 1.1.1.1, flags: SJPCL

Incoming interface: Serial0/0, RPF nbr 192.168.123.1

Outgoing interface list: Null

R3 has joined the shared tree toward the RP (its RPF neighbor is 192.168.123.1), but it doesn't receive anything. Let's look at R1:

R1#show ip mroute 239.1.1.2

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.2), 00:02:10/00:03:18, RP 1.1.1.1, flags: SJC

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Sparse, 00:02:10/00:03:18

(192.168.123.2, 239.1.1.2), 00:01:38/00:02:00, flags: PJT

Incoming interface: Serial0/0, RPF nbr 0.0.0.0

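Outgoing interface list: Null

R1 receives the traffic from R2 on Serial0/0, but it can't forward it to R3 because R3 is reachable only through that same Serial0/0 interface. The incoming interface is never added to the outgoing interface list, so the (S,G) entry is pruned and its outgoing interface list is Null.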
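The flag letters in the two show ip mroute outputs above are easy to misread. This small Python helper expands them using the legend that IOS printed with the output; the dictionary is copied from that legend, and unknown letters are reported rather than guessed.

```python
# Flag legend copied from the "show ip mroute" output above.
MROUTE_FLAGS = {
    "D": "Dense", "S": "Sparse", "B": "Bidir Group", "s": "SSM Group",
    "C": "Connected", "L": "Local", "P": "Pruned", "R": "RP-bit set",
    "F": "Register flag", "T": "SPT-bit set", "J": "Join SPT",
    "M": "MSDP created entry", "X": "Proxy Join Timer Running",
    "A": "Candidate for MSDP Advertisement", "U": "URD",
    "I": "Received Source Specific Host Report", "Z": "Multicast Tunnel",
    "z": "MDT-data group sender", "Y": "Joined MDT-data group",
    "y": "Sending to MDT-data group",
}


def decode_flags(flags: str) -> list[str]:
    """Expand a flag string such as 'SJPCL' into readable names (case-sensitive)."""
    return [MROUTE_FLAGS.get(flag, f"unknown ({flag})") for flag in flags]


print(decode_flags("SJPCL"))  # R3's (*, 239.1.1.2) entry
# → ['Sparse', 'Join SPT', 'Pruned', 'Connected', 'Local']
print(decode_flags("PJT"))    # R1's (192.168.123.2, 239.1.1.2) entry
# → ['Pruned', 'Join SPT', 'SPT-bit set']
```

For R1's (S,G) entry, the Pruned flag matches the Null outgoing interface list: Serial0/0 is the incoming interface, so there is nowhere left to forward the stream.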

Hey Rene, great info! Would the Auto-RP mapping agent behind a spoke problem that you mentioned in the previous post also be solved by configuring PIM NBMA mode on R1's Serial0/0?

Hi Rene,

Can the mapping-agent-behind-a-spoke issue also be solved with the ip pim nbma-mode command?

Davis

Hi Davis,

I’m afraid not. The Auto-RP addresses 224.0.1.39 and 224.0.1.40 use dense mode flooding, and this is not supported by NBMA mode. If your mapping agent is behind a spoke router, then you’ll have to pick one of the three options to fix this:

- Get rid of the point-to-multipoint interface and use point-to-point sub-interfaces. This allows the hub router to forward multicast to all spoke routers, since it now receives and sends traffic on different interfaces.

- Move the mapping agent to a router above the hub router, so it is not behind a spoke router.

...

Ok. Thanks Rene.

Davis

Hi Rene,

Could you please confirm whether I need to use ip pim nbma-mode when running multicast over a DMVPN hub-and-spoke topology?

Just like in your topology, R1 is the hub and R2 and R3 are DMVPN spokes. I need to configure the hub and spoke tunnel interfaces with ip ospf network point-to-multipoint to meet a certain requirement. Do I need to use ip pim nbma-mode here or not? If not, can you please explain why it isn't needed in this DMVPN environment?