OSPF calculates the cost of an interface with a simple formula:

cost = reference bandwidth / interface bandwidth

The reference bandwidth is a value in Mbps that we can set ourselves. By default, it is 100 Mbps on Cisco IOS routers. The interface bandwidth is something we can look up.
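As a minimal sketch, the formula can be written in Python. The function name `ospf_cost` is hypothetical. The sketch assumes the reference bandwidth is given in Mbps (as with the `auto-cost reference-bandwidth` command) and the interface bandwidth in kbps (as in the `BW` field of `show interfaces`), and that the result is rounded down to a whole number with a minimum of 1, which matches the IOS outputs later in this lesson.

```python
def ospf_cost(reference_mbps: int, interface_kbps: int) -> int:
    """Return the OSPF cost for an interface (hypothetical helper).

    reference_mbps: reference bandwidth in Mbps (IOS default: 100)
    interface_kbps: interface bandwidth in kbps, as in the BW field
    """
    reference_kbps = reference_mbps * 1000   # convert both values to kbps
    cost = reference_kbps // interface_kbps  # integer division drops the fraction
    return max(1, cost)                      # the cost never goes below 1


print(ospf_cost(100, 100_000))  # FastEthernet: 1
print(ospf_cost(100, 1_544))    # Serial (1,544 kbps): 64
```

Converting the reference bandwidth to kbps first keeps both operands in the same unit, so the division works directly with the numbers IOS prints.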

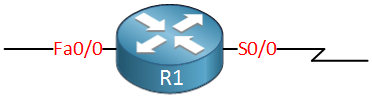

Let’s take a look at an example of how this works. I’ll use this router:

The router above has two interfaces, a FastEthernet and a serial interface:

R1#show ip interface brief

Interface IP-Address OK? Method Status Protocol

FastEthernet0/0 192.168.1.1 YES manual up up

Serial0/0 192.168.2.1 YES manual up up

Let’s enable OSPF on these interfaces:

R1(config)#router ospf 1

R1(config-router)#network 192.168.1.0 0.0.0.255 area 0

R1(config-router)#network 192.168.2.0 0.0.0.255 area 0

After enabling OSPF, we can check the reference bandwidth:

Router#show ip ospf | include Reference

Reference bandwidth unit is 100 mbps

By default, this is 100 Mbps. Let’s see what cost values OSPF has calculated for our two interfaces:

Router#show interfaces FastEthernet 0/0 | include BW

MTU 1500 bytes, BW 100000 Kbit/sec, DLY 100 usec

Router#show ip ospf interface FastEthernet 0/0 | include Cost

Process ID 1, Router ID 192.168.1.1, Network Type BROADCAST, Cost: 1

The FastEthernet interface has a bandwidth of 100,000 kbps (100 Mbps), and the OSPF cost is 1. The cost is calculated like this:

100,000 kbps reference bandwidth / 100,000 kbps interface bandwidth = 1

What about the serial interface? Let’s find out:

R1#show interfaces Serial 0/0 | include BW

MTU 1500 bytes, BW 1544 Kbit/sec, DLY 20000 usec,

R1#show ip ospf interface Serial 0/0 | include Cost

Process ID 1, Router ID 192.168.2.1, Network Type POINT_TO_POINT, Cost: 64

The serial interface has a bandwidth of 1,544 kbps (about 1.5 Mbps) and a cost of 64. It was calculated like this:

100,000 kbps reference bandwidth / 1,544 kbps interface bandwidth ≈ 64.77

The fractional part is dropped, so the result is rounded down to 64.
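Rounding down is not the same as rounding to the nearest integer: the exact quotient here is about 64.77, which would round to 65, yet IOS shows a cost of 64. A quick Python check of both operations, using the bandwidth values from the output above (the variable names are illustrative only):

```python
reference_kbps = 100 * 1000  # default reference bandwidth, 100 Mbps in kbps
serial_kbps = 1544           # BW of Serial0/0 from show interfaces

exact = reference_kbps / serial_kbps       # true quotient
truncated = reference_kbps // serial_kbps  # fractional part dropped
rounded = round(exact)                     # nearest integer, for comparison

print(f"{exact:.2f} {truncated} {rounded}")  # 64.77 64 65
```

Only the truncated value matches the `Cost: 64` reported by `show ip ospf interface`.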

The default reference bandwidth of 100 Mbps causes problems if you use Gigabit or 10 Gigabit interfaces. The lowest possible cost value is 1, so with the default reference bandwidth, FastEthernet, Gigabit, and 10 Gigabit interfaces all end up with an OSPF cost of 1, and OSPF has no way to prefer the faster link.
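To make the problem concrete, here is a small Python sketch that applies the formula with the default 100 Mbps reference to FastEthernet (100,000 kbps), Gigabit (1,000,000 kbps), and 10 Gigabit (10,000,000 kbps) bandwidths. The helper `ospf_cost` and the dictionary names are hypothetical; the sketch assumes the cost is rounded down with a minimum of 1.

```python
DEFAULT_REFERENCE_MBPS = 100

# Interface bandwidths in kbps, as IOS reports them in the BW field
interfaces_kbps = {
    "FastEthernet": 100_000,
    "GigabitEthernet": 1_000_000,
    "TenGigabitEthernet": 10_000_000,
}


def ospf_cost(reference_mbps: int, interface_kbps: int) -> int:
    # Round down to an integer and never go below the minimum cost of 1
    return max(1, (reference_mbps * 1000) // interface_kbps)


costs = {name: ospf_cost(DEFAULT_REFERENCE_MBPS, bw) for name, bw in interfaces_kbps.items()}
print(costs)
# {'FastEthernet': 1, 'GigabitEthernet': 1, 'TenGigabitEthernet': 1}
```

For Gigabit and 10 Gigabit the raw quotient is 0, so the minimum of 1 kicks in and all three links tie.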

If you use Gigabit interfaces (or 10 Gigabit), then it’s better to change the reference bandwidth. You can do it like this:

Router(config-router)#auto-cost reference-bandwidth ?

<1-4294967> The reference bandwidth in terms of Mbits per second

Use the auto-cost reference-bandwidth command and specify the value you want in Mbps. Let’s set it to 1000 Mbps:

Router(config-router)#auto-cost reference-bandwidth 1000

% OSPF: Reference bandwidth is changed.

Please ensure reference bandwidth is consistent across all routers

Cisco IOS warns you that you should configure the same reference bandwidth on all OSPF routers. Let’s verify our work:

Router#show ip ospf | include Reference

Reference bandwidth unit is 1000 mbps

Our reference bandwidth is now 1000 Mbps. Let’s see what the cost of our FastEthernet interface is now:

Router#show ip ospf interface FastEthernet 0/0 | include Cost

Process ID 1, Router ID 192.168.1.1, Network Type BROADCAST, Cost: 10

Topology-MTID Cost Disabled Shutdown Topology Name

It now has a cost of 10 (1,000,000 kbps / 100,000 kbps), which means that a Gigabit interface would end up with a cost of 1.
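The same kind of sketch, with the reference raised to 1000 Mbps, reproduces the cost of 10 for FastEthernet and 1 for Gigabit. It also shows that a 10 Gigabit interface still ties with Gigabit at a cost of 1 under this reference; by the same formula, separating them would take a reference of at least 10,000 Mbps. The helper `ospf_cost` and the dictionary are hypothetical, and the sketch assumes the cost is rounded down with a minimum of 1.

```python
def ospf_cost(reference_mbps: int, interface_kbps: int) -> int:
    # Integer division rounds down; the cost is never lower than 1
    return max(1, (reference_mbps * 1000) // interface_kbps)


interfaces_kbps = {
    "FastEthernet": 100_000,
    "GigabitEthernet": 1_000_000,
    "TenGigabitEthernet": 10_000_000,
}

# Compare the reference used in this lesson with a larger one
for reference_mbps in (1_000, 10_000):
    costs = {name: ospf_cost(reference_mbps, bw) for name, bw in interfaces_kbps.items()}
    print(reference_mbps, costs)
# 1000 {'FastEthernet': 10, 'GigabitEthernet': 1, 'TenGigabitEthernet': 1}
# 10000 {'FastEthernet': 100, 'GigabitEthernet': 10, 'TenGigabitEthernet': 1}
```

Whichever value you pick, the IOS warning still applies: use the same reference bandwidth on every OSPF router.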


Well explained.

“It now has a cost of 1 which means that a Gigabit interface would end up with a cost of 1.”

Did you mean 10?

Oops, yes. Just fixed it! Thanks Scott.

Check this command, it’s not working.

This one is working:

Router#show ip protocols | include Reference

Hey Rene,

I’ve seen auto-cost reference-bandwidth as well as reference-bandwidth. Is there any difference between those two commands, or are they just IOS version dependent?

Thank you!