
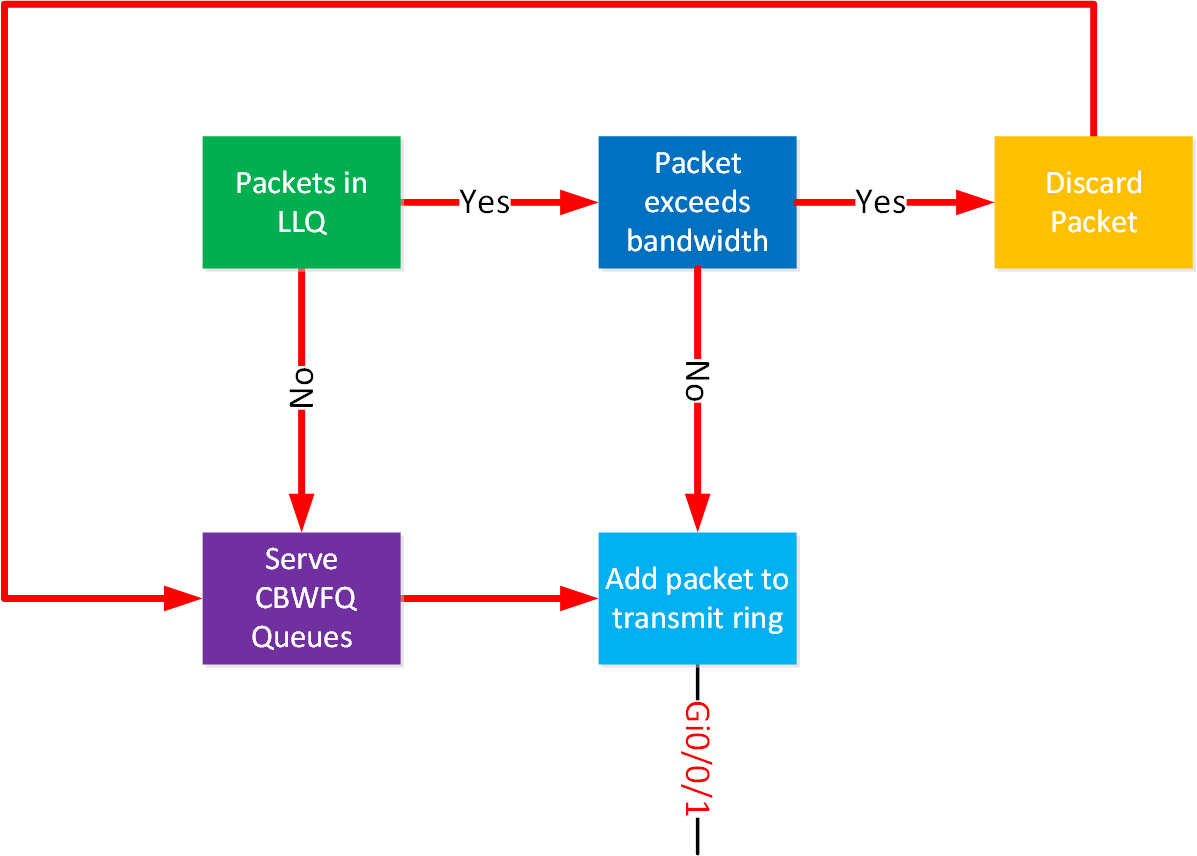

In the LLQ lesson, I explained that priority queue traffic is transmitted right away before dealing with any other CBWFQ queues. Here’s what it looks like:

What if you receive packets non-stop for the priority queue? Will this starve the other queues? In principle, yes, because the scheduler serves packets in the priority queue before dealing with any of the CBWFQ queues. To prevent this, the priority queue has a bandwidth limit that is enforced by a policer. This is how it works:

When packets are in the priority queue, a policer checks whether they exceed the configured bandwidth. If they don’t, we send those packets to the transmit ring, and they are transmitted. If a packet in the priority queue does exceed the bandwidth, we discard it and continue with the CBWFQ queues.
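The policing decision described above can be sketched as a simple token bucket. This is only an illustration, not how IOS XE implements it: the class name `PriorityPolicer`, the `simulate` helper and the 1490-byte packet size are assumptions made for the example. The `congested` flag reflects what the tests later in this lesson show, namely that the limit is only enforced when the interface is congested. The 20 Mbits/sec rate and 500000-byte burst are taken from the router output further down.

```python
class PriorityPolicer:
    """Token-bucket sketch of the priority-queue policer (illustrative only)."""

    def __init__(self, rate_bps: int, burst_bytes: int):
        self.rate_bps = rate_bps          # configured priority bandwidth
        self.burst_bytes = burst_bytes    # bucket depth
        self.tokens = float(burst_bytes)  # start with a full bucket
        self.last = 0.0                   # arrival time of the previous packet (seconds)

    def conforms(self, now: float, size_bytes: int, congested: bool) -> bool:
        """Return True to transmit the packet, False to drop it."""
        # Refill tokens for the time elapsed since the previous packet.
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bps / 8)
        if not congested:
            return True                   # assumption: no policing without congestion
        if self.tokens >= size_bytes:
            self.tokens -= size_bytes     # within the limit: send to the transmit ring
            return True
        return False                      # exceeds the limit: drop, move on to CBWFQ


def simulate(offered_bps: int, seconds: int = 10, size_bytes: int = 1490) -> float:
    """Offer a constant packet stream and return the accepted rate in Mbits/sec."""
    policer = PriorityPolicer(rate_bps=20_000_000, burst_bytes=500_000)
    interval = size_bytes * 8 / offered_bps   # gap between packets in seconds
    packets = int(seconds / interval)
    accepted = sum(
        policer.conforms(i * interval, size_bytes, congested=True)
        for i in range(packets)
    )
    return accepted * size_bytes * 8 / seconds / 1e6


print(round(simulate(30_000_000), 1))   # offered 30 Mbits/sec, policed near 20
```

Offering more than the configured rate yields an accepted rate slightly above 20 Mbits/sec, because the initially full bucket lets a short burst through before the limit bites.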

We can test and verify this.

Configuration

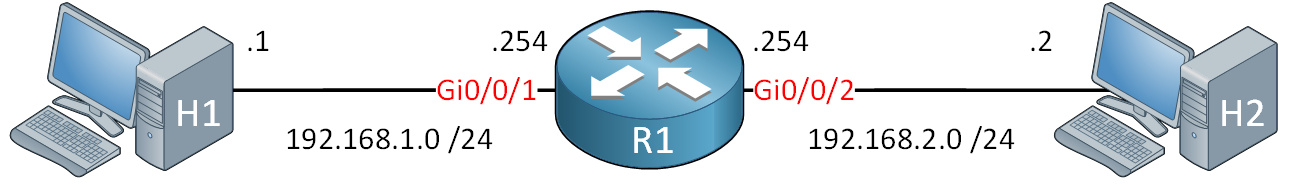

I’ll use the following topology:

We need only two Ubuntu hosts connected to a router. I’ll use H1 as an iPerf client and H2 as an iPerf server.

Configurations

Want to take a look for yourself? Here, you will find the startup configuration of R1.

R1

hostname R1

!

interface GigabitEthernet0/0/0

ip address 192.168.1.254 255.255.255.0

negotiation auto

!

interface GigabitEthernet0/0/1

ip address 192.168.2.254 255.255.255.0

speed 100

no negotiation auto

!

end

I’m using a Cisco ISR4331 router running Cisco IOS XE Version 15.5(2)S3, RELEASE SOFTWARE (fc2).

I also reduced the speed of one of the router interfaces to create a bottleneck:

R1#show running-config interface GigabitEthernet 0/0/1

Building configuration...

Current configuration : 110 bytes

!

interface GigabitEthernet0/0/1

ip address 192.168.2.254 255.255.255.0

speed 100

no negotiation auto

Let’s start the iPerf server on H2. I’ll start it on two different ports:

ubuntu@H2:~$ iperf3 -s -p 5201

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

ubuntu@H2:~$ iperf3 -s -p 5202

-----------------------------------------------------------

Server listening on 5202

-----------------------------------------------------------

That’s all we have to do on the server. It will listen on these two ports.

Without Priority Queue

Let’s run some tests. We’ll start with a scenario where we don’t have QoS configured.

Without Congestion

First, we’ll see what kind of bitrate we can get. I’ll use this on the client:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5202 -u -b 150M -t 10

Connecting to host 192.168.2.2, port 5202

[ 5] local 192.168.1.1 port 51045 connected to 192.168.2.2 port 5202

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 17.9 MBytes 150 Mbits/sec 12938

[ 5] 1.00-2.00 sec 17.9 MBytes 150 Mbits/sec 12949

[ 5] 2.00-3.00 sec 17.9 MBytes 150 Mbits/sec 12948

[ 5] 3.00-4.00 sec 17.9 MBytes 150 Mbits/sec 12949

[ 5] 4.00-5.00 sec 17.9 MBytes 150 Mbits/sec 12950

[ 5] 5.00-6.00 sec 17.9 MBytes 150 Mbits/sec 12948

[ 5] 6.00-7.00 sec 17.9 MBytes 150 Mbits/sec 12949

[ 5] 7.00-8.00 sec 17.9 MBytes 150 Mbits/sec 12949

[ 5] 8.00-9.00 sec 17.9 MBytes 150 Mbits/sec 12949

[ 5] 9.00-10.00 sec 17.9 MBytes 150 Mbits/sec 12949

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 179 MBytes 150 Mbits/sec 0.000 ms 0/129478 (0%) sender

[ 5] 0.00-10.11 sec 115 MBytes 95.2 Mbits/sec 0.190 ms 46396/129472 (36%) receiver

Let me explain those parameters:

-c 192.168.2.2: This is the IP address of our server (H2).

-p 5202: The port number of the server.

-u: We want to use UDP instead of TCP.

-b 150M: Generate 150 Mbits/sec of traffic.

-t 10: Transmit for 10 seconds.

The client transmits 150 Mbits/sec, and the server receives 95.2 Mbits/sec. That makes sense because our bottleneck is that 100 Mbits/sec interface.
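As a rough sanity check on why the receiver sees 95.2 rather than a full 100 Mbits/sec: iPerf reports UDP payload only, while the link also carries headers. The sketch below assumes 1448-byte UDP payloads (this is not printed in the output, but it is consistent with about 12949 datagrams per second at 150 Mbits/sec) and standard Ethernet framing overhead. The constant and function names are my own.

```python
# Assumed sizes in bytes; none of these are printed by iPerf itself.
UDP_PAYLOAD = 1448                     # assumed iPerf3 UDP datagram payload
UDP_IP_HEADERS = 8 + 20                # UDP header + IPv4 header
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12    # header, FCS, preamble, inter-frame gap


def max_udp_goodput(link_mbps: float) -> float:
    """Upper bound on the UDP payload bitrate a link can carry, in Mbits/sec."""
    on_wire = UDP_PAYLOAD + UDP_IP_HEADERS + ETHERNET_OVERHEAD
    return link_mbps * UDP_PAYLOAD / on_wire


print(f"{max_udp_goodput(100):.1f} Mbits/sec")
```

Under these assumptions the ceiling is a little above the measured 95.2 Mbits/sec, so the result is in line with a saturated 100 Mbits/sec interface.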

Let’s try generating 30 Mbits/sec traffic where packets are marked with DSCP EF:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5201 -u -b 30M --tos 184 -t 10

Connecting to host 192.168.2.2, port 5201

[ 5] local 192.168.1.1 port 42278 connected to 192.168.2.2 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 3.57 MBytes 30.0 Mbits/sec 2588

[ 5] 1.00-2.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 2.00-3.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 3.00-4.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 4.00-5.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 5.00-6.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 6.00-7.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 7.00-8.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 8.00-9.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 9.00-10.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 35.8 MBytes 30.0 Mbits/sec 0.000 ms 0/25896 (0%) sender

[ 5] 0.00-10.04 sec 35.8 MBytes 29.9 Mbits/sec 0.218 ms 0/25896 (0%) receiver

By using --tos 184 we set the ToS byte to 184 (decimal), which equals DSCP EF. Because there is no congestion, all packets make it to the server. Let’s also try this with 80 Mbits/sec of unmarked traffic:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5202 -u -b 80M -t 10

Connecting to host 192.168.2.2, port 5202

[ 5] local 192.168.1.1 port 33149 connected to 192.168.2.2 port 5202

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 9.53 MBytes 79.9 Mbits/sec 6900

[ 5] 1.00-2.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 2.00-3.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 3.00-4.00 sec 9.54 MBytes 80.0 Mbits/sec 6907

[ 5] 4.00-5.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 5.00-6.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 6.00-7.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 7.00-8.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 8.00-9.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 9.00-10.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 95.4 MBytes 80.0 Mbits/sec 0.000 ms 0/69055 (0%) sender

[ 5] 0.00-10.04 sec 95.3 MBytes 79.6 Mbits/sec 0.204 ms 10/69055 (0.014%) receiver

There is no congestion, so practically all packets make it (only 10 of 69055 are lost).
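The relationship between the --tos 184 value used earlier and DSCP EF is plain bit arithmetic: DSCP occupies the upper six bits of the ToS byte, and the lower two bits are used for ECN. A small sketch, with helper names of my own choosing:

```python
def tos_to_dscp(tos: int) -> int:
    """DSCP is the upper six bits of the ToS byte."""
    return tos >> 2


def dscp_to_tos(dscp: int) -> int:
    """Shift the DSCP value into the upper six bits, leaving ECN at zero."""
    return dscp << 2


print(tos_to_dscp(184))   # 46, the DSCP value for EF
print(dscp_to_tos(46))    # 184, the value passed to --tos
```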

With Congestion

Let’s see what happens when there is congestion. I’ll generate 30 Mbits/sec of DSCP EF traffic and 80 Mbits/sec of unmarked traffic. I’ll run both commands at the same time:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5201 -u -b 30M --tos 184 -t 10

Connecting to host 192.168.2.2, port 5201

[ 5] local 192.168.1.1 port 48526 connected to 192.168.2.2 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 3.57 MBytes 30.0 Mbits/sec 2588

[ 5] 1.00-2.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 2.00-3.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 3.00-4.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 4.00-5.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 5.00-6.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 6.00-7.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 7.00-8.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 8.00-9.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 9.00-10.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 35.8 MBytes 30.0 Mbits/sec 0.000 ms 0/25896 (0%) sender

[ 5] 0.00-10.11 sec 26.2 MBytes 21.7 Mbits/sec 0.156 ms 6943/25896 (27%) receiver

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5202 -u -b 80M -t 10

Connecting to host 192.168.2.2, port 5202

[ 5] local 192.168.1.1 port 58261 connected to 192.168.2.2 port 5202

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 9.53 MBytes 79.9 Mbits/sec 6900

[ 5] 1.00-2.00 sec 9.54 MBytes 80.0 Mbits/sec 6907

[ 5] 2.00-3.00 sec 9.54 MBytes 80.0 Mbits/sec 6905

[ 5] 3.00-4.00 sec 9.54 MBytes 80.0 Mbits/sec 6907

[ 5] 4.00-5.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 5.00-6.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 6.00-7.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 7.00-8.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 8.00-9.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 9.00-10.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 95.4 MBytes 80.0 Mbits/sec 0.000 ms 0/69055 (0%) sender

[ 5] 0.00-10.06 sec 89.1 MBytes 74.3 Mbits/sec 0.193 ms 4538/69054 (6.6%) receiver

Now we are trying to squeeze 30 Mbits/sec and 80 Mbits/sec of traffic through a 100 Mbits/sec interface, which results in packet loss. We see 21.7 Mbits/sec of DSCP EF traffic and 74.3 Mbits/sec of unmarked traffic. Those two add up to 96 Mbits/sec, which is about the maximum bitrate we can get.
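To make those numbers easier to compare, here is a small sketch that recomputes the loss percentages from the Lost/Total columns of the two receiver lines and adds up the received bitrates. The function name is my own; the figures are copied from the iPerf output above.

```python
def loss_percent(lost: int, total: int) -> float:
    """Share of datagrams that never reached the receiver, in percent."""
    return 100 * lost / total


ef_loss = loss_percent(6943, 25896)         # DSCP EF stream
unmarked_loss = loss_percent(4538, 69054)   # unmarked stream

print(f"EF loss: {ef_loss:.1f}%, unmarked loss: {unmarked_loss:.1f}%")
print(f"combined received: {21.7 + 74.3:.1f} Mbits/sec")
```

Without QoS, the DSCP EF marking gives the traffic no protection: in this run it actually loses a larger share of its packets than the unmarked stream.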

With Priority Queue

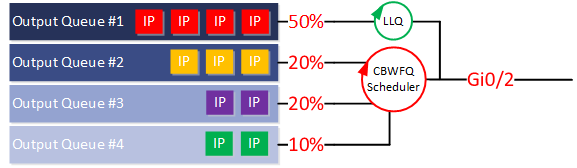

Let’s configure a priority queue to see how it impacts our bitrates and packet drops. I’ll configure a class-map that matches DSCP EF and a policy map with a priority queue for 20 Mbits/sec:

R1(config)#class-map DSCP_EF

R1(config-cmap)#match dscp ef

R1(config)#policy-map LLQ

R1(config-pmap)#class DSCP_EF

R1(config-pmap-c)#priority 20000

R1(config)#interface GigabitEthernet 0/0/1

R1(config-if)#service-policy output LLQ

Here’s what it looks like:

R1#show policy-map interface GigabitEthernet 0/0/1

GigabitEthernet0/0/1

Service-policy output: LLQ

queue stats for all priority classes:

Queueing

queue limit 512 packets

(queue depth/total drops/no-buffer drops) 0/0/0

(pkts output/bytes output) 0/0

Class-map: DSCP_EF (match-all)

0 packets, 0 bytes

5 minute offered rate 0000 bps, drop rate 0000 bps

Match: dscp ef (46)

Priority: 20000 kbps, burst bytes 500000, b/w exceed drops: 0

Class-map: class-default (match-any)

0 packets, 0 bytes

5 minute offered rate 0000 bps, drop rate 0000 bps

Match: any

queue limit 416 packets

(queue depth/total drops/no-buffer drops) 0/0/0

(pkts output/bytes output) 0/0

We now have a 20 Mbits/sec priority queue for DSCP EF marked traffic, and everything else ends up in the class-default class.
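The burst bytes 500000 value in the output above was not configured explicitly. As far as I know, the documented default burst for the priority command is 200 ms of traffic at the configured rate, and the arithmetic matches. A short sketch (the function name is my own, and the 200 ms default is the assumption being checked):

```python
def default_priority_burst(rate_kbps: int, interval_ms: int = 200) -> int:
    """Bytes that can be sent in interval_ms at rate_kbps."""
    bits = rate_kbps * 1000 * interval_ms // 1000
    return bits // 8


print(default_priority_burst(20000))   # compare with "burst bytes" in the output
```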

Without Congestion

Let’s see what happens when there is no congestion. I’ll generate 30 Mbits/sec DSCP EF marked traffic:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5201 -u -b 30M --tos 184 -t 10

Connecting to host 192.168.2.2, port 5201

[ 5] local 192.168.1.1 port 37116 connected to 192.168.2.2 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 3.57 MBytes 30.0 Mbits/sec 2588

[ 5] 1.00-2.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 2.00-3.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 3.00-4.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 4.00-5.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 5.00-6.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 6.00-7.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 7.00-8.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 8.00-9.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 9.00-10.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 35.8 MBytes 30.0 Mbits/sec 0.000 ms 0/25896 (0%) sender

[ 5] 0.00-10.04 sec 35.8 MBytes 29.9 Mbits/sec 0.217 ms 0/25896 (0%) receiver

There is no congestion, so we can get a higher bitrate than what we configured with the priority command. Let’s check the router output:

R1#show policy-map interface GigabitEthernet 0/0/1

GigabitEthernet0/0/1

Service-policy output: LLQ

queue stats for all priority classes:

Queueing

queue limit 512 packets

(queue depth/total drops/no-buffer drops) 0/0/0

(pkts output/bytes output) 25896/38585040

Class-map: DSCP_EF (match-all)

25896 packets, 38585040 bytes

5 minute offered rate 981000 bps, drop rate 0000 bps

Match: dscp ef (46)

Priority: 20000 kbps, burst bytes 500000, b/w exceed drops: 0

Class-map: class-default (match-any)

20 packets, 2094 bytes

5 minute offered rate 0000 bps, drop rate 0000 bps

Match: any

queue limit 416 packets

(queue depth/total drops/no-buffer drops) 0/0/0

(pkts output/bytes output) 19/1677

We see many packets that match the DSCP_EF class map, and none of them were dropped for exceeding the bandwidth.
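The router counters line up with what iPerf sent: 25896 datagrams on the client and 25896 packets in the DSCP_EF class. Dividing the byte counter by the packet counter also gives the per-packet size the router accounts for. The header breakdown in this sketch is an assumption (untagged Ethernet, IPv4 without options), not something the output states:

```python
packets, total_bytes = 25896, 38585040   # DSCP_EF counters from the output above

frame_size, remainder = divmod(total_bytes, packets)
print(frame_size, remainder)             # bytes per packet, leftover bytes

# Assumed breakdown: 14-byte Ethernet header + 20-byte IPv4 header + 8-byte UDP header
udp_payload = frame_size - 14 - 20 - 8
print(udp_payload)
```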

With Congestion

Now, let’s see what happens when there is congestion. I’ll start those two packet streams of 30 Mbits/sec DSCP EF traffic and 80 Mbits/sec unmarked traffic at the same time:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5201 -u -b 30M --tos 184 -t 10

Connecting to host 192.168.2.2, port 5201

[ 5] local 192.168.1.1 port 59719 connected to 192.168.2.2 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 3.57 MBytes 30.0 Mbits/sec 2588

[ 5] 1.00-2.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 2.00-3.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 3.00-4.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 4.00-5.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 5.00-6.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 6.00-7.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 7.00-8.00 sec 3.58 MBytes 30.0 Mbits/sec 2589

[ 5] 8.00-9.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

[ 5] 9.00-10.00 sec 3.58 MBytes 30.0 Mbits/sec 2590

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 35.8 MBytes 30.0 Mbits/sec 0.000 ms 0/25896 (0%) sender

[ 5] 0.00-10.28 sec 24.2 MBytes 19.7 Mbits/sec 0.113 ms 8406/25895 (32%) receiver

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5202 -u -b 80M -t 10

Connecting to host 192.168.2.2, port 5202

[ 5] local 192.168.1.1 port 51758 connected to 192.168.2.2 port 5202

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 9.53 MBytes 79.9 Mbits/sec 6900

[ 5] 1.00-2.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 2.00-3.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 3.00-4.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 4.00-5.00 sec 9.54 MBytes 80.0 Mbits/sec 6907

[ 5] 5.00-6.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 6.00-7.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 7.00-8.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 8.00-9.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 9.00-10.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 95.4 MBytes 80.0 Mbits/sec 0.000 ms 0/69055 (0%) sender

[ 5] 0.00-10.05 sec 91.2 MBytes 76.2 Mbits/sec 0.232 ms 2982/69053 (4.3%) receiver

Now, we see that the priority queue has a bitrate of 19.7 Mbits/sec, with 32% packet loss. Our unmarked traffic has a bitrate of 76.2 Mbits/sec, with some packet loss.

This looks good. We have a priority queue, but it’s limited to 20 Mbits/sec and doesn’t starve our CBWFQ class-default queue. You can see the DSCP EF packet drops here:

R1#show policy-map interface GigabitEthernet 0/0/1

GigabitEthernet0/0/1

Service-policy output: LLQ

queue stats for all priority classes:

Queueing

queue limit 512 packets

(queue depth/total drops/no-buffer drops) 0/10404/0

(pkts output/bytes output) 23209/34581410

Class-map: DSCP_EF (match-all)

33613 packets, 50083370 bytes

5 minute offered rate 991000 bps, drop rate 306000 bps

Match: dscp ef (46)

Priority: 20000 kbps, burst bytes 500000, b/w exceed drops: 10404

Class-map: class-default (match-any)

102994 packets, 153419717 bytes

5 minute offered rate 3058000 bps, drop rate 108000 bps

Match: any

queue limit 416 packets

(queue depth/total drops/no-buffer drops) 0/3705/0

(pkts output/bytes output) 99286/147902287

Because of congestion, our DSCP EF priority queue traffic has packet drops. These are packets that exceeded the 20 Mbits/sec priority queue bitrate.
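The priority-queue counters in this output are internally consistent: packets sent plus packets dropped by the policer equals the packets matched by the class. The sketch below checks that and computes the dropped share. The function name is my own, and since these counters are cumulative since they were last cleared, they don't have to match a single iPerf run.

```python
def drop_share(sent: int, dropped: int) -> float:
    """Percentage of classified packets that the policer dropped."""
    return 100 * dropped / (sent + dropped)


matched = 33613    # packets classified into DSCP_EF
sent = 23209       # pkts output for the priority queue
dropped = 10404    # b/w exceed drops

print(sent + dropped == matched)
print(f"dropped share: {drop_share(sent, dropped):.1f}%")
```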

We’ll do one more test. Let’s turn it up a notch and increase DSCP EF traffic to 300 Mbits/sec while simultaneously sending 80 Mbits/sec of unmarked traffic:

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5201 -u -b 300M --tos 184 -t 10

Connecting to host 192.168.2.2, port 5201

[ 5] local 192.168.1.1 port 42122 connected to 192.168.2.2 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 35.7 MBytes 300 Mbits/sec 25876

[ 5] 1.00-2.00 sec 35.8 MBytes 300 Mbits/sec 25898

[ 5] 2.00-3.00 sec 35.8 MBytes 300 Mbits/sec 25897

[ 5] 3.00-4.00 sec 35.8 MBytes 300 Mbits/sec 25897

[ 5] 4.00-5.00 sec 35.8 MBytes 300 Mbits/sec 25899

[ 5] 5.00-6.00 sec 35.8 MBytes 300 Mbits/sec 25897

[ 5] 6.00-7.00 sec 35.8 MBytes 300 Mbits/sec 25898

[ 5] 7.00-8.00 sec 35.8 MBytes 300 Mbits/sec 25898

[ 5] 8.00-9.00 sec 35.8 MBytes 300 Mbits/sec 25898

[ 5] 9.00-10.00 sec 35.8 MBytes 300 Mbits/sec 25898

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 358 MBytes 300 Mbits/sec 0.000 ms 0/258956 (0%) sender

[ 5] 0.00-10.06 sec 28.0 MBytes 23.3 Mbits/sec 0.177 ms 238705/258946 (92%) receiver

ubuntu@H1:~$ iperf3 -c 192.168.2.2 -p 5202 -u -b 80M -t 10

Connecting to host 192.168.2.2, port 5202

[ 5] local 192.168.1.1 port 60708 connected to 192.168.2.2 port 5202

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 9.53 MBytes 79.9 Mbits/sec 6900

[ 5] 1.00-2.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 2.00-3.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 3.00-4.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 4.00-5.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 5.00-6.00 sec 9.54 MBytes 80.0 Mbits/sec 6907

[ 5] 6.00-7.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 7.00-8.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 8.00-9.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

[ 5] 9.00-10.00 sec 9.54 MBytes 80.0 Mbits/sec 6906

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 95.4 MBytes 80.0 Mbits/sec 0.000 ms 0/69055 (0%) sender

[ 5] 0.00-10.56 sec 91.0 MBytes 72.3 Mbits/sec 5.651 ms 3154/69054 (4.6%) receiver

Even with this massive amount of DSCP EF traffic, we don’t have any issues. Our priority queue is limited to 20 Mbits/sec (the receiver measures 23.3 Mbits/sec for this run), and the unmarked traffic in the class-default queue still gets a 72.3 Mbits/sec bitrate. This shows that our priority queue doesn’t starve our CBWFQ queue(s). Let’s look at the packet drops on the router: