
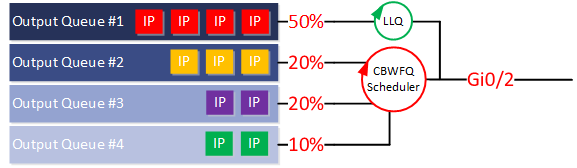

LLQ (Low Latency Queuing) is an extension of CBWFQ (Class Based Weighted Fair Queuing) where we add a priority queue to the scheduler. Here’s an illustration to help you visualize this:

In the picture above, output queues 2, 3, and 4 are attached to the regular CBWFQ scheduler and are served in a (weighted) round-robin fashion. Queue 1, however, is attached to the LLQ scheduler and bypasses the CBWFQ scheduler. This turns queue 1 into a priority queue: everything that ends up in queue 1 is served before any of the other queues.
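To make the scheduling idea concrete, here is a minimal Python sketch of one output interface, assuming one packet leaves per transmit slot. A plain weighted round robin (weights counted in packets) stands in for CBWFQ, which in reality weighs queues by bandwidth. `llq_schedule` and its parameters are hypothetical names for illustration only. The point it shows: whenever a packet is waiting in the priority queue, it is sent next, no matter where the round robin is.

```python
from collections import deque


def llq_schedule(voice_arrivals, cbwfq_queues, weights, slots):
    """Simulate which packet leaves the interface in each transmit slot.

    voice_arrivals: dict mapping slot number -> packets arriving in the priority queue
    cbwfq_queues:   list of deques served in weighted round robin (stand-in for CBWFQ)
    weights:        packets each CBWFQ queue may send per round (must be > 0)
    slots:          number of transmit opportunities to simulate
    """
    priority_q = deque()
    credits = list(weights)
    idx = 0
    sent = []
    for t in range(slots):
        priority_q.extend(voice_arrivals.get(t, []))
        # The priority queue is always checked first and bypasses the round robin.
        if priority_q:
            sent.append(priority_q.popleft())
            continue
        # Otherwise, find the next CBWFQ queue that has packets and credit left.
        for _ in range(2 * len(cbwfq_queues)):
            q = cbwfq_queues[idx]
            if q and credits[idx] > 0:
                sent.append(q.popleft())
                credits[idx] -= 1
                break
            idx = (idx + 1) % len(cbwfq_queues)
            if idx == 0:
                credits = list(weights)  # a new round starts: refill every queue's credit
    return sent


queues = [deque(["a1", "a2", "a3", "a4"]), deque(["b1", "b2", "b3", "b4"])]
print(llq_schedule({0: ["v1"], 3: ["v2"]}, queues, weights=[2, 1], slots=8))
# ['v1', 'a1', 'a2', 'v2', 'b1', 'a3', 'a4', 'b2']
```

Note how "v2" is sent in the very slot it arrives. The two CBWFQ queues only share the slots that the priority queue leaves free.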

Configuration

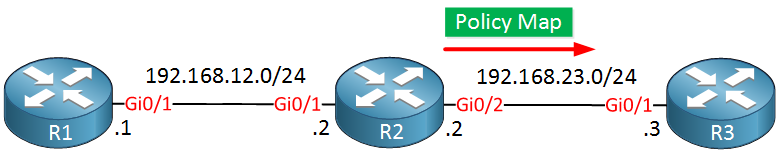

To demonstrate this, I will use the following topology:

All we need are three routers to demonstrate LLQ. R1 will send some packets to R3, and R2 in the middle will do the queuing.

Configurations

Want to take a look for yourself? Here you will find the startup configuration of each device.

R1

hostname R1

!

ip cef

!

interface GigabitEthernet0/1

ip address 192.168.12.1 255.255.255.0

!

ip route 0.0.0.0 0.0.0.0 192.168.12.2

!

end

R2

hostname R2

!

ip cef

!

interface GigabitEthernet0/1

ip address 192.168.12.2 255.255.255.0

!

interface GigabitEthernet0/2

ip address 192.168.23.2 255.255.255.0

!

end

R3

hostname R3

!

ip cef

!

interface GigabitEthernet0/1

ip address 192.168.23.3 255.255.255.0

!

ip route 0.0.0.0 0.0.0.0 192.168.23.2

!

end

First, we need class-maps to classify the traffic that we want to put in the priority queue. I will create two class-maps: one for VOICE traffic (marked with DSCP EF) and another for CALL_SIGNALING traffic (marked with DSCP CS3). The voice traffic will end up in the priority queue, and the call signaling traffic will be served by CBWFQ.

R2(config)#class-map VOICE

R2(config-cmap)#match dscp ef

R2(config-cmap)#exit

R2(config)#class-map CALL_SIGNALING

R2(config-cmap)#match dscp cs3

R2(config-cmap)#exit

Now we can create a policy-map and configure a priority queue for the VOICE class-map:

R2(config)#policy-map LLQ

R2(config-pmap)#class VOICE

R2(config-pmap-c)#priority ?

<1-2000000> Kilo Bits per second

level Multi-Level Priority Queue

percent % of total bandwidth

<cr>

Depending on your hardware, you might see two or three options. You can configure the priority queue with a rate in kilobits per second or as a percentage of the total bandwidth. The third option on my router is the multi-level priority queue. Most routers only allow one priority queue, but this one lets me create two priority queues (high and low). This can be useful if, for example, you have real-time voice and video traffic and you want to prioritize both. With a single priority queue, you can’t decide whether voice or video traffic should be served first; within the priority queue, packets are simply served first come, first served.

With a high and low priority queue, you can ensure that both are prioritized, but one priority queue is still prioritized over the other.
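Here is a minimal Python sketch of the high/low idea. It assumes each priority level is strict relative to the level below it, so the next packet always comes from the highest non-empty level. It ignores the policing that the priority command also applies. The function name and the voice/video/data queue contents are hypothetical.

```python
from collections import deque


def drain_by_level(levels):
    """Send every queued packet, always taking the next one from the highest non-empty level.

    levels: deques ordered from highest to lowest precedence, for example
            [high priority queue (voice), low priority queue (video), CBWFQ traffic].
    """
    sent = []
    while any(levels):  # an empty deque is falsy
        for q in levels:
            if q:
                sent.append(q.popleft())
                break
    return sent


levels = [deque(["voice1", "voice2"]), deque(["video1", "video2"]), deque(["data1"])]
print(drain_by_level(levels))
# ['voice1', 'voice2', 'video1', 'video2', 'data1']
```

Both voice and video are sent ahead of the regular traffic, but video never overtakes voice.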

Let’s keep it simple. I will create a priority queue for 2000 Kbps:

R2(config-pmap-c)#priority 2000

R2(config-pmap-c)#exit

For our signaling traffic, we’ll configure a bandwidth guarantee of 1000 Kbps:

R2(config-pmap)#class CALL_SIGNALING

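R2(config-pmap-c)#bandwidth 1000

R2(config-pmap-c)#exit

R2(config-pmap)#exit

Last but not least, let’s activate the policy-map on R2. The traffic flows from R1 to R3, so we apply it in the output direction on GigabitEthernet0/2, the interface that faces R3: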

R2(config)#interface GigabitEthernet 0/2

R2(config-if)#service-policy output LLQ

That’s all we have to do…
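Conceptually, the policy-map checks its classes in the order they are configured, and the first class that matches wins. Anything that matches none of them lands in class-default. The hypothetical Python model below mirrors that logic for our two class-maps. `POLICY_LLQ` and `classify` are illustration names only, not anything IOS exposes.

```python
# Hypothetical model of the LLQ policy-map; class names mirror the IOS config.
POLICY_LLQ = [
    ("VOICE", {46}),           # class-map VOICE: match dscp ef
    ("CALL_SIGNALING", {24}),  # class-map CALL_SIGNALING: match dscp cs3
]


def classify(dscp, policy=POLICY_LLQ):
    """Return the first class whose DSCP values match, else class-default."""
    for class_name, dscp_values in policy:
        if dscp in dscp_values:
            return class_name
    return "class-default"


for dscp in (46, 24, 0):
    print(dscp, classify(dscp))
# 46 VOICE
# 24 CALL_SIGNALING
# 0 class-default
```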

Verification

Let’s verify our work, starting with the class-maps:

R2#show class-map

Class Map match-all CALL_SIGNALING (id 2)

Match dscp cs3 (24)

Class Map match-any class-default (id 0)

Match any

Class Map match-all VOICE (id 1)

Match dscp ef (46)

Above, we can see the two class-maps I created. The class-default class is always there. Traffic that is not matched by one of your class-maps will end up in the class-default class. Here’s the policy-map:

R2#show policy-map

Policy Map LLQ

Class VOICE

priority 2000 (kbps)

Class CALL_SIGNALING

bandwidth 1000 (kbps)

Let’s generate some traffic and see what happens. I can use some simple pings for this, but I will have to set the ToS byte. The ping command only accepts a ToS parameter in decimal, so I’ll have to do some conversion:

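- DSCP EF (46) is 101110 in binary. DSCP occupies the six most significant bits of the ToS byte (the two lowest bits are used for ECN and stay 0), so the ToS byte is 10111000, which is 184 in decimal.

- DSCP CS3 (24) is 011000 in binary, so the ToS byte is 01100000, which is 96 in decimal.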
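If you’d rather not do this by hand, the conversion is just a left shift by two bits, because DSCP sits in the upper six bits of the ToS byte. Here is a small Python sketch, assuming the two ECN bits are 0 as they are in these pings. The function name is my own.

```python
def dscp_to_tos(dscp):
    """Place a 6-bit DSCP value in the upper six bits of the ToS byte (ECN bits = 0)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    return dscp << 2  # same as dscp * 4


for name, dscp in [("EF", 46), ("CS3", 24)]:
    tos = dscp_to_tos(dscp)
    print(f"DSCP {name} ({dscp}) = {dscp:06b} -> ToS {tos:08b} = {tos}")
# DSCP EF (46) = 101110 -> ToS 10111000 = 184
# DSCP CS3 (24) = 011000 -> ToS 01100000 = 96
```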

Let’s send those pings:

R1#ping 192.168.23.3 tos 184 repeat 100

Type escape sequence to abort.

Sending 100, 100-byte ICMP Echos to 192.168.23.3, timeout is 2 seconds:

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Success rate is 100 percent (100/100), round-trip min/avg/max = 5/6/11 ms

R1#ping 192.168.23.3 tos 96 repeat 200

Type escape sequence to abort.

Sending 200, 100-byte ICMP Echos to 192.168.23.3, timeout is 2 seconds:

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Success rate is 100 percent (200/200), round-trip min/avg/max = 2/6/16 ms

Let’s see if our traffic matched:

R2#show policy-map interface GigabitEthernet 0/2

GigabitEthernet0/2

Service-policy output: LLQ

queue stats for all priority classes:

Queueing

queue limit 64 packets

(queue depth/total drops/no-buffer drops) 0/0/0

(pkts output/bytes output) 100/11400

Class-map: VOICE (match-all)

100 packets, 11400 bytes

5 minute offered rate 0000 bps, drop rate 0000 bps

Match: dscp ef (46)

Priority: 2000 kbps, burst bytes 50000, b/w exceed drops: 0

Class-map: CALL_SIGNALING (match-all)

200 packets, 22800 bytes

5 minute offered rate 0000 bps, drop rate 0000 bps

Match: dscp cs3 (24)

Queueing

queue limit 64 packets

(queue depth/total drops/no-buffer drops) 0/0/0

(pkts output/bytes output) 200/22800

bandwidth 1000 kbps

Class-map: class-default (match-any)

7 packets, 724 bytes

5 minute offered rate 0000 bps, drop rate 0000 bps

Match: any

queue limit 64 packets

(queue depth/total drops/no-buffer drops) 0/0/0

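(pkts output/bytes output) 7/724

There we go: the packets ended up in the correct classes. Keep in mind that as long as there is no congestion, both classes can use more bandwidth than configured. When the interface is congested, the VOICE class is policed to 2000 kbps, and CALL_SIGNALING is guaranteed at least 1000 kbps.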
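The "burst bytes 50000" value in the output matches a burst of 200 ms of traffic at the configured rate (2,000,000 bits/s x 0.2 s / 8 = 50,000 bytes). As far as I know, 200 ms is the IOS default for the priority command. The Python sketch below computes that figure. It also models the policing of the priority class during congestion as a simple single-rate token bucket. `PriorityPolicer` and the 114-byte packet size (11400 bytes / 100 packets in the output above) are illustrative assumptions, not IOS internals. In this model, non-conforming packets correspond to what IOS would count as "b/w exceed drops".

```python
def default_priority_burst_bytes(rate_kbps, burst_ms=200):
    """Assumed default: burst = burst_ms milliseconds of traffic at the configured rate."""
    return rate_kbps * 1000 * burst_ms // (8 * 1000)


class PriorityPolicer:
    """Hypothetical single-rate token bucket for the priority class during congestion."""

    def __init__(self, rate_kbps, burst_bytes):
        self.rate_bytes_per_s = rate_kbps * 1000 / 8
        self.burst = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0

    def offer(self, size_bytes, now):
        """Return True if the packet conforms (sent), False if it would be dropped."""
        # Refill tokens for the elapsed time, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate_bytes_per_s)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False


burst = default_priority_burst_bytes(2000)
policer = PriorityPolicer(2000, burst)
# 1000 voice packets of 114 bytes arriving at the same instant during congestion:
passed = sum(policer.offer(114, now=0.0) for _ in range(1000))
print(burst, passed)  # 50000 438
```

Only the burst (438 x 114 = 49,932 bytes) gets through instantly. After that, the bucket refills at 250,000 bytes per second.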

Configurations

Want to take a look for yourself? Here you will find the final configuration of each device.

R1

hostname R1

!

ip cef

!

interface GigabitEthernet0/1

ip address 192.168.12.1 255.255.255.0

!

ip route 0.0.0.0 0.0.0.0 192.168.12.2

!

end

R2

hostname R2

!

ip cef

!

class-map match-all CALL_SIGNALING

match dscp cs3

class-map match-all VOICE

match dscp ef

!

policy-map LLQ

class VOICE

priority 2000

class CALL_SIGNALING

bandwidth 1000

!

interface GigabitEthernet0/1

ip address 192.168.12.2 255.255.255.0

!

interface GigabitEthernet0/2

ip address 192.168.23.2 255.255.255.0

service-policy output LLQ

!

end

R3

hostname R3

!

ip cef

!

interface GigabitEthernet0/1

ip address 192.168.23.3 255.255.255.0

!

ip route 0.0.0.0 0.0.0.0 192.168.23.2

!

end

Conclusion

You have now learned how to configure LLQ on a Cisco IOS router.

Hello Rene,

Couple of questions.


Is it possible to provide an example that shows the difference between congestion management and congestion avoidance? As a matter of fact, I do not understand TCP global synchronization very well. It would be great if you could use an example to explain congestion management and avoidance in relation to TCP global synchronization.

This question is basically about the priority command vs. the bandwidth command. The priority command is used to ensure the maximum bandwidth during congestion. On the other hand, bandwidth is used to ensure

Hi Azm,

Congestion management is about dealing with congestion when it occurs, and congestion avoidance is about trying to avoid congestion. To understand congestion avoidance, you have to think about how the TCP window size and global synchronization work. I explained this in this lesson:

https://networklessons.com/cisco/ccnp-route/tcp-window-size-scaling

Congestion avoidance works by dropping certain TCP segments so that the window size reduces, slowing down the TCP transmission and by doing so, preventing congestion from happening.

The difference between priority

Hello Rene,

Thanks a lot for your explanation. However, I still need some more clarification. Let’s say I have an interface Gig 0/1 that is capable of handling 100 packets per second. I have three different kinds of traffic passing through the interface: Traffic A, B, and C.

And the QoS is configured like this:

Traffic A: Priority 50 packets

Traffic B: Bandwidth 25 packets

Traffic C: Bandwidth 25 packets

I am just using packets per second instead of MB or KB per second.

As far as my understanding goes, QoS will work like this:

#Every second the interface

Hello Azm

In your example, we assume that Traffic A has been configured to be attached to an LLQ scheduler, and that is what you mean by priority traffic. Also, we assume that Traffic B and C are functioning in a

Hello Laz,

That was my question and that is the answer I was looking for. Thank you so much as usual.

Azm