
In a DMVPN network, your spoke routers are likely connected over a variety of links. Depending on the site, you might be using DSL, cable or wireless connections.

With all these different connections, it’s not feasible to use a single QoS policy and apply it to all spoke routers. You also don’t want to create a unique QoS policy for each router if you have hundreds of spoke routers.

Per-Tunnel QoS allows us to create different QoS policies and apply them to different NHRP “groups”. Multiple spoke routers can be assigned to the same group, but the policy is applied to each spoke individually, so every router gets its own shaper instead of sharing one with the rest of the group.

For example, let’s say we have 300 spoke routers:

- 100 spoke routers connected through DSL (1 Mbps).

- 100 spoke routers connected through Cable (5 Mbps).

- 100 spoke routers connected through Wireless (2 Mbps).

In this case we can create three QoS policies: one each for DSL, cable and wireless. In each QoS policy we will configure the correct shaping rate so our spoke routers don’t get overburdened with traffic. When we apply one of the QoS policies, traffic to each spoke router will be shaped to the correct rate.
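As a rough sketch of the three-policy idea, the hub could hold one shaper per connection type. The policy names SHAPE_DSL, SHAPE_CABLE and SHAPE_WIRELESS are made up for this example, and the rates are written in bits per second:

```
! Hypothetical policy names; one shaping policy per connection type
policy-map SHAPE_DSL
 class class-default
  shape average 1000000
!
policy-map SHAPE_CABLE
 class class-default
  shape average 5000000
!
policy-map SHAPE_WIRELESS
 class class-default
  shape average 2000000
```

With 300 spokes this still means only three policies on the hub, because the policy is selected per group rather than per router.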

Let’s take a look at the configuration so you can see how this works…

Configuration

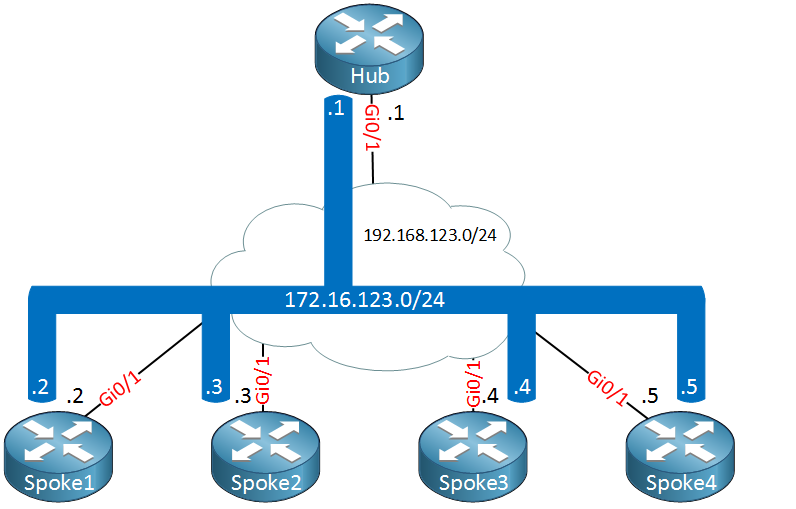

To demonstrate DMVPN Per-Tunnel QoS I will use the following topology:

Above we have a hub and four spoke routers. Let’s imagine that the spoke1 and spoke2 routers are connected using a 5 Mbps link, while the spoke3 and spoke4 routers are using a slower 1 Mbps link.

Our DMVPN network is used for VoIP traffic so our QoS policy should have a priority queue for RTP traffic.

We use subnet 192.168.123.0/24 as the “underlay” network and 172.16.123.0/24 as the “overlay” (tunnel) network.


Let’s start with a basic DMVPN phase 2 configuration. Here’s the configuration of the hub router:

Hub#

interface Tunnel0

ip address 172.16.123.1 255.255.255.0

ip nhrp authentication DMVPN

ip nhrp map multicast dynamic

ip nhrp network-id 1

tunnel source GigabitEthernet0/1

tunnel mode gre multipoint

end

And here’s the configuration of one spoke router:

Spoke1#

interface Tunnel0

ip address 172.16.123.2 255.255.255.0

ip nhrp authentication DMVPN

ip nhrp map 172.16.123.1 192.168.123.1

ip nhrp map multicast 192.168.123.1

ip nhrp network-id 1

ip nhrp nhs 172.16.123.1

tunnel source GigabitEthernet0/1

tunnel mode gre multipoint

end

The configuration above is the same on all spoke routers except for the IP address.

Let’s start with the hub configuration…

Hub Configuration

Let’s create a class-map and policy-map that gives priority to VoIP traffic:

Hub(config)#class-map VOIP

Hub(config-cmap)#match protocol rtp

I’ll use NBAR to identify our RTP traffic. Here’s the policy-map:

Hub(config)#policy-map PRIORITY

Hub(config-pmap)#class VOIP

Hub(config-pmap-c)#priority percent 20

In the policy-map we will create a priority queue for 20 percent of the interface bandwidth. Instead of applying this policy-map to an interface, we’ll create two more policy-maps. The first one will be for our spoke routers that are connected through the 1 Mbps connections:

Hub(config)#policy-map SHAPE_1M

Hub(config-pmap)#class class-default

Hub(config-pmap-c)#shape average 1m

Hub(config-pmap-c)#service-policy PRIORITY

In the policy-map above called “SHAPE_1M” we shape all traffic in the class-default class-map to 1 Mbps. Within the shaper, we apply the policy map that creates a priority queue for 20% of the bandwidth. 20% of 1 Mbps is 200 kbps.

Let’s create the second policy-map for our spoke routers that are connected through a 5 Mbps connection:

Hub(config)#policy-map SHAPE_5M

Hub(config-pmap)#class class-default

Hub(config-pmap-c)#shape average 5M

Hub(config-pmap-c)#service-policy PRIORITY

The policy-map is the same except that it will shape up to 5 Mbps. The priority queue will get 20% of 5 Mbps which is 1 Mbps.

We have our policy-maps but we still have to apply them somehow. We don’t apply them to an interface but we will map them to NHRP groups:

Hub(config)#interface Tunnel 0

Hub(config-if)#nhrp ?

attribute NHRP attribute

event-publisher Enable NHRP smart spoke feature

group NHRP group name

map Map group name to QoS service policy

route-watch Enable NHRP route watch

On the tunnel interface we can use the nhrp map command. Take a look below:

Hub(config-if)#nhrp map ?

group NHRP group mapping

Let’s use the group parameter:

Hub(config-if)#nhrp map group ?

WORD NHRP group name

Now you can specify an NHRP group name. You can use any name but to keep it simple, it’s best to use the same name as the policy-maps that we created earlier:

Hub(config-if)#nhrp map group SHAPE_1M ?

service-policy QoS service-policy

The only thing left to do is to attach the NHRP group to the policy-map:
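The mapping would likely look something like the sketch below. Treat it as an assumption based on the help output above rather than the original configuration: the `output` direction keyword, the choice of group names that match the policy-map names, and the spoke-side `nhrp group` line are not shown in the text. Older IOS releases use `ip nhrp` instead of `nhrp` for these commands.

```
! Hub: map each NHRP group to its shaping policy (outbound direction assumed)
interface Tunnel0
 nhrp map group SHAPE_1M service-policy output SHAPE_1M
 nhrp map group SHAPE_5M service-policy output SHAPE_5M
!
! Spoke on a 5 Mbps link (for example Spoke1): announce its group to the hub
interface Tunnel0
 nhrp group SHAPE_5M
```

The spoke sends its group name to the hub when it registers through NHRP, and the hub then applies the matching policy to that spoke’s tunnel. On the hub, `show dmvpn detail` and `show policy-map multipoint Tunnel0` should show which group and policy each spoke ended up with.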

Thanks for the great article–very timely since I recently finished my DMVPN studies, and I am reviewing QoS! Also, I like your new feature towards the bottom that allows you to click on a device and see its config–cool stuff.

One limitation of this solution seems to be that it works only in DMVPN phase 1, which is now obsolete. As soon as there is spoke-to-spoke traffic, your QoS settings will be lost. Maybe one day there will be a “phase 4” where the spokes will pull down, and apply locally, any group settings from the Hub.

–Andrew

Hi Andrew,

Glad to hear you like it!

Per-tunnel QoS is a “hub to spoke” solution. It seems there is a spoke-to-spoke solution though, I just found this on the Cisco website:

https://www.cisco.com/c/en/us/td/docs/ios-xml/ios/sec_conn_dmvpn/configuration/xe-16/sec-conn-dmvpn-xe-16-book.html

Hi Rene, I’m about to start my CCNP SWITCH, having just passed my CCNP ROUTE exam, and I’d like to know if your CCNP SWITCH section covers everything that is going to be thrown at me when I take the exam. I’ve already got the official Cisco exam book and it doesn’t cover all the topics for its own exam. It was the same with their CCNP ROUTE book, which I found out the hard way when I failed my CCNP ROUTE exam the first time, after seeing questions about stuff that the official book covered with just 2 or 3 lines and no configuration examples. Please help as I just want ...

Of course, I will defer to what Rene says, but I believe it is unrealistic to expect any one source to be comprehensive for a CCNP level exam. I think your best bet is to use a variety of sources: videos, books, Cisco’s site, and labs that are available.

One of the best books I picked up for the entire CCNP process was Cisco CCNP Switch Simplified. The book is massive, and the form factor is hilariously large (given “Simplified” in the title). What really makes this title shine are the many, many high quality labs–so with one resource you get both reference ...

Hi Shaun,

I agree with what Andrew says here. I’d say the content here is 99% complete. There are a few minor topics that I still want to add (POE, RPR, SSO).

Rene